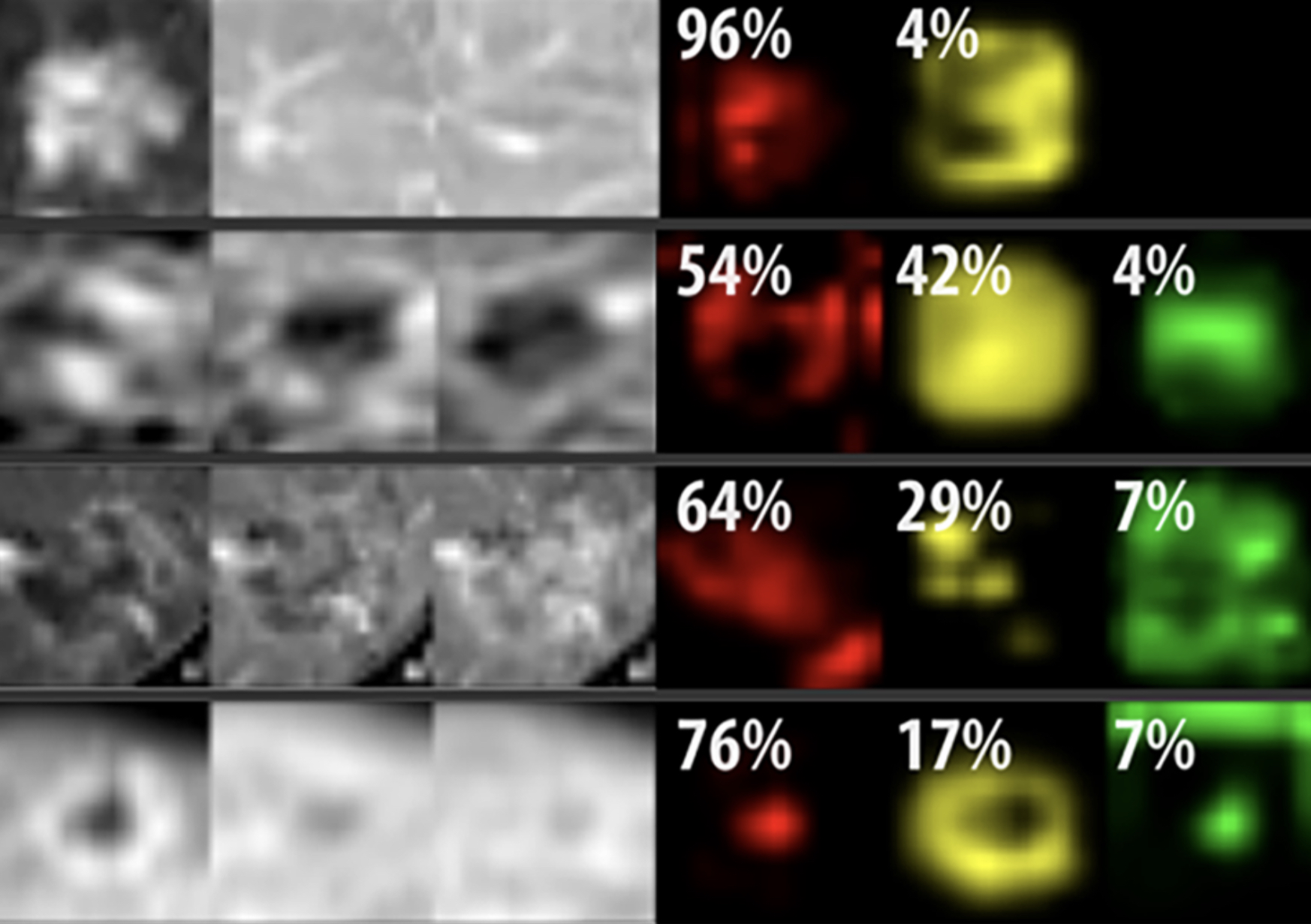

Convolutional neural networks (CNNs) have demonstrated the potential to become effective and accurate decision support tools for radiologists. A major barrier to clinical translation, however, is that the majority of such algorithms currently function like a “black box”. During training on a large set of paired input and output data, a CNN's internal layers are automatically adjusted to mathematically “map” the input to the output. When exposed to new input, the trained CNN can then output a diagnostic prediction (e.g., “hepatocellular carcinoma”); however, there is usually no way of knowing how the model arrived at a specific decision. With such limited output, how can radiologists know whether the model made a mistake? In this two-part proof-of-concept study, the authors from the Yale Interventional Oncology group present an “interpretable” deep learning system.

Part I introduces a deep learning system that provides diagnostic decisions for focal liver lesions on multi-phasic MRI exams, taking into account the entire 3D extent of each lesion. Part II demonstrates how an existing CNN model can be converted to an “interpretable” system, using the pre-trained model from Part I. Analyzing the inner layers of a CNN makes it possible to infer which radiological features were used to make a decision, where the model “thought” these features occurred, and how important each feature was to the final decision. With future refinement and validation, such methods have the potential to facilitate clinical translation of CNNs as efficiency and decision-support tools for radiologists.
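To make the idea of "analyzing inner layers" more concrete, the following is a minimal sketch and not the authors' published method. It assumes that a hidden-layer activation vector is available for a new lesion, along with a few exemplar activation vectors for each named radiological feature. The function names, the feature names, the cosine-similarity scoring, and the threshold are all hypothetical choices made for illustration. The sketch covers only which features appear to be present and their relative weight, not where they occur in the image.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of equally sized activation vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def score_features(lesion_activation, feature_exemplars, threshold=0.3):
    """Return relative scores for features whose exemplars resemble the lesion.

    Assumption: similarity between hidden-layer activations is a usable proxy
    for "the model saw this feature". This is an illustrative heuristic only.
    """
    scores = {}
    for name, exemplars in feature_exemplars.items():
        similarity = cosine(lesion_activation, mean_vector(exemplars))
        if similarity >= threshold:
            scores[name] = similarity
    total = sum(scores.values())
    # Normalize so the retained features' scores sum to 1.
    return {name: s / total for name, s in scores.items()} if total else {}


# Toy, made-up activations (3 hidden units) for three illustrative features.
feature_exemplars = {
    "arterial phase hyperenhancement": [[1.0, 0.0, 0.0], [0.8, 0.2, 0.0]],
    "washout": [[0.0, 1.0, 0.0]],
    "central scar": [[0.0, 0.0, 1.0]],
}
lesion_activation = [0.9, 0.4, 0.0]

relevance = score_features(lesion_activation, feature_exemplars)
for name, weight in sorted(relevance.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {weight:.2f}")
```

In this toy example, the lesion's activations resemble the first two features and not the third, so only those two are reported, with scores normalized to sum to one. A real system would work on learned feature maps from the trained CNN rather than hand-written vectors.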

Key Points:

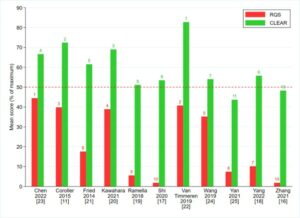

- Deep learning demonstrates high performance and time-efficiency in the diagnosis of liver lesions on volumetric multi-phasic MRI, showing potential as a decision-support tool for radiologists.

- An interpretable deep learning system can explain its decision-making to radiologists by identifying relevant imaging features and showing where these features are found on an image, facilitating clinical translation.

- By providing feedback on the importance of various radiological features in performing differential diagnosis, interpretable deep learning systems have the potential to interface with standardized reporting systems such as LI-RADS, validating ancillary features and improving clinical practicality.

- An interpretable deep learning system could potentially add quantitative data to radiologic reports and provide radiologists with evidence-based decision support.

Articles: Deep learning for liver tumor diagnosis part I: development of a convolutional neural network classifier for multi-phasic MRI; Deep learning for liver tumor diagnosis part II: convolutional neural network interpretation using radiologic imaging features

Authors: Charlie A. Hamm, Clinton J. Wang, Lynn J. Savic, Marc Ferrante, Isabel Schobert, Todd Schlachter, MingDe Lin, James S. Duncan, Jeffrey C. Weinreb, Julius Chapiro, Brian Letzen