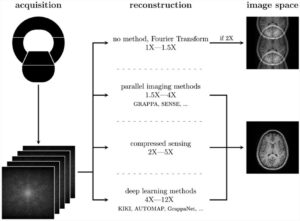

Deep learning has recently pervaded the field of radiology, achieving promising results that have encouraged both scientists and entrepreneurs to apply these models to improve patient care.

However, “with great power there must also come — great responsibility” [1]! In most cases, the complexity of deep learning models forces their users, and sometimes also their developers, to treat them as black boxes. Indeed, model opacity is one of the most challenging barriers to overcome before AI solutions can be fully translated into clinical settings, where the transparency and explainability of diagnostic and therapeutic decisions play a key role.

We know what task the model is performing and, using saliency maps, we may know where the most informative regions of the image are. But easily explaining why the model returns a specific output remains a challenge.

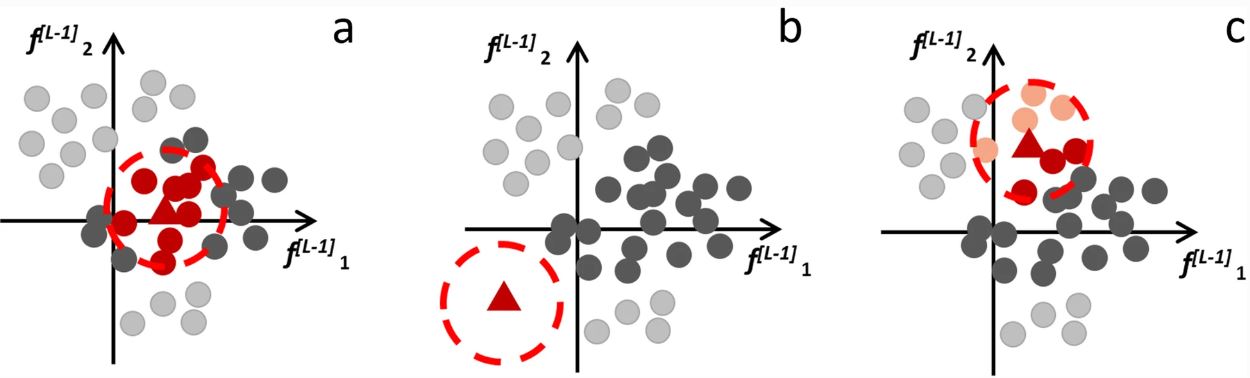

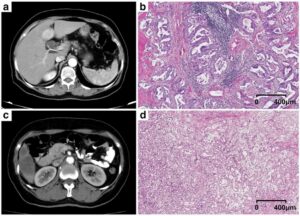

Several researchers are focusing on making AI models more explainable [2,3]. In our article, Prof. Baselli, Prof. Sardanelli and I proposed mimicking what a radiologist would do when facing diagnostic uncertainty. We proposed enriching the AI model output with information about the annotated training cases that lie close to the current case in the feature space and are, therefore, “similar” to it. The information provided to the radiologist will vary according to the task performed and may include (without being limited to) original images, ground truth and predicted labels, clinical information, saliency maps, and neighbor density in the feature space.

In our view, this may represent a user-friendly way to convey information that usually remains hidden inside the model, hopefully increasing radiologists’ confidence in making their final diagnostic decision. “Summarizing, if a classifier is data-driven, let us make its interpretation data-driven too” [4].
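To make the idea of retrieving annotated cases near the current case more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the implementation from the article: the `AnnotatedCase` fields, the two-dimensional feature vectors, the Euclidean distance and the function name `nearest_annotated_cases` are all hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AnnotatedCase:
    """One entry of a hypothetical library of annotated cases."""
    case_id: str
    features: Tuple[float, ...]  # position in the model's feature space (toy values)
    true_label: str              # ground truth annotation
    predicted_label: str         # label the model assigned to this case


def nearest_annotated_cases(
    current_features: Tuple[float, ...],
    library: List[AnnotatedCase],
    k: int = 3,
) -> List[Tuple[float, AnnotatedCase]]:
    """Return the k annotated cases closest to the current case.

    Euclidean distance is an assumption; any metric suited to the
    feature space could be swapped in.
    """
    scored = [(math.dist(current_features, ac.features), ac) for ac in library]
    scored.sort(key=lambda pair: pair[0])  # closest first
    return scored[:k]


# Toy library with made-up feature vectors and labels.
library = [
    AnnotatedCase("AC-1", (0.10, 0.20), "benign", "benign"),
    AnnotatedCase("AC-2", (0.15, 0.25), "benign", "benign"),
    AnnotatedCase("AC-3", (0.90, 0.80), "malignant", "malignant"),
    AnnotatedCase("AC-4", (0.85, 0.90), "malignant", "benign"),
]

neighbors = nearest_annotated_cases((0.12, 0.22), library, k=2)
for distance, case in neighbors:
    print(
        f"{case.case_id}: distance={distance:.3f}, "
        f"truth={case.true_label}, predicted={case.predicted_label}"
    )
```

In a real system, each retrieved case could be shown to the radiologist together with its image, labels, clinical information and saliency map, as listed above.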

Read more about this topic in the recently published article (link below): “Opening the black box of machine learning in radiology: can the proximity of annotated cases be a way?” [4].

[1] Lee S (1962) Amazing Fantasy #15, Marvel Comics, New York City, USA

[2] Guidotti R, Monreale A, Ruggeri S, Turini F, Pedreschi D, Giannotti F (2018) A survey of methods for explaining black box models. ACM Computing Surveys 51(5): 1-42.

[3] Arrieta AB, Díaz-Rodríguez N, Del Ser J, et al. (2020) Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion 58: 82-115.

[4] Baselli G, Codari M, Sardanelli F (2020) Opening the black box of machine learning in radiology: can the proximity of annotated cases be a way? European Radiology Experimental 4: 1-7. DOI: 10.1186/s41747-020-00159-0

Key points

- Clinical rules and best practice require diagnostic and therapeutic decisions to be transparent and clearly explained.

- Machine/deep learning offers powerful classification and decision tools, though in a black-box manner that is hard to explain.

- We propose presenting the current case (CC) alongside training and/or validation data stored in a library of annotated cases (ACs).

- Appropriate metrics in the classification space would yield the distance between the CC and ACs.

- Proximity to similarly classified ACs would confirm high confidence; proximity to differently classified ACs would indicate low confidence; a CC falling in an uninhabited region would indicate insufficiency of the training process.
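The three situations in the last key point can be sketched as a simple rule. This is only an illustration under stated assumptions: the function name `assess_confidence`, the Euclidean metric, and the `radius` and `min_agreement` thresholds are hypothetical and are not values or methods taken from the article.

```python
import math


def assess_confidence(cc_features, cc_label, annotated_cases,
                      radius=0.2, min_agreement=0.8):
    """Judge the current case (CC) from the annotated cases (ACs) around it.

    annotated_cases is a list of (features, label) pairs.
    radius and min_agreement are illustrative thresholds (assumptions).
    """
    # Labels of the ACs that fall within `radius` of the CC.
    nearby_labels = [
        label
        for features, label in annotated_cases
        if math.dist(cc_features, features) <= radius
    ]
    if not nearby_labels:
        # The CC falls in an uninhabited region of the feature space.
        return "insufficient training data"
    # Fraction of nearby ACs that share the CC's predicted label.
    agreement = sum(label == cc_label for label in nearby_labels) / len(nearby_labels)
    return "high confidence" if agreement >= min_agreement else "low confidence"


# Toy annotated cases with made-up feature vectors.
acs = [
    ((0.10, 0.20), "benign"),
    ((0.15, 0.25), "benign"),
    ((0.90, 0.80), "malignant"),
    ((0.80, 0.90), "benign"),
    ((0.85, 0.85), "malignant"),
]

print(assess_confidence((0.12, 0.22), "benign", acs))     # high confidence
print(assess_confidence((0.85, 0.85), "malignant", acs))  # low confidence
print(assess_confidence((0.50, 0.50), "benign", acs))     # insufficient training data
```

A fixed radius is only one way to define "proximity"; a k-nearest-neighbor rule or a density estimate would express the same idea with different trade-offs.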

Authors: Giuseppe Baselli, Marina Codari & Francesco Sardanelli